Lessons Learned in Testing a UI Test Automation Tool

At my company, we develop a user interface (UI) test automation tool. It has a graphical user interface of its own and needs testing, just like any other piece of software.

From day one of development, we started testing it. We were tight on resources and had to move fast. We knew how to test under such constraints, but we didn’t know how to test a tool for automated testing. Who shaves the barber?

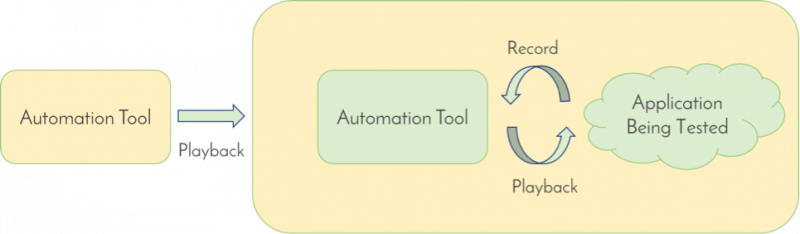

Imagine a tool that intercepts keyboard and mouse events to record the user’s behavior. Now think of the same tool executing an automated test that generates keyboard and mouse events to replay user actions. Is it possible for the test to emulate user input and control another instance of the tool to automatically record and play another test?

We quickly abandoned this idea as too complex and prone to error. Things could go wrong at many different points: while intercepting emulated keyboard and mouse events, while connecting to the same browser with two copies of the tool, or anywhere in mobile testing. Regardless of the risks, was it even the right way to proceed? We searched for other methods and came up with a few ideas that performed well.

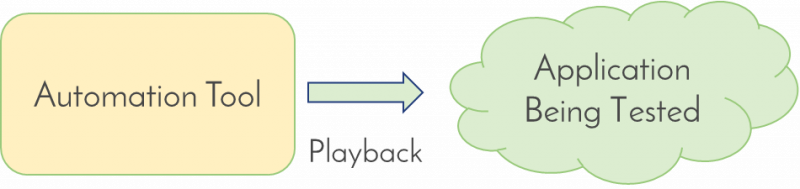

As we were tight on resources, we searched for the most efficient approach, which, in our case, was simply writing playback tests. Our tool includes a code editor and debugger, and we developed 90 percent of the tool using the tool itself. Therefore, by writing playback tests, we manually checked the recording module, editor, and debugger and automatically checked the playback module by running the tests.

We created artificial applications that contain various types of UI controls and log every user action to a file. After running a test, we simply compare the actual log with the expected one to determine whether the test passed or failed.
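The log comparison can be illustrated with a minimal sketch. This is not our tool's actual code: the function names `diff_logs` and `logs_match`, the file names, and the one-action-per-line log format are all assumptions made for the example. It uses only Python's standard `difflib` module.

```python
import difflib
from pathlib import Path


def diff_logs(expected_lines, actual_lines):
    """Return unified-diff lines; an empty list means the logs are identical."""
    return list(
        difflib.unified_diff(
            expected_lines,
            actual_lines,
            fromfile="expected",
            tofile="actual",
            lineterm="",
        )
    )


def logs_match(expected_path, actual_path):
    """Compare two log files line by line (hypothetical one-action-per-line format)."""
    expected = Path(expected_path).read_text(encoding="utf-8").splitlines()
    actual = Path(actual_path).read_text(encoding="utf-8").splitlines()
    diff = diff_logs(expected, actual)
    for line in diff:
        print(line)  # show what differed so a failure is easy to diagnose
    return not diff


if __name__ == "__main__":
    # Hypothetical log entries written by an artificial test application.
    expected = ["click button#ok", "type input#name Ann"]
    actual = ["click button#ok", "type input#name Bob"]
    print("PASS" if not diff_logs(expected, actual) else "FAIL")
```

Printing the diff rather than returning only a boolean is a deliberate choice in this sketch: when a playback test fails, the mismatching log lines point directly at the UI action that was replayed incorrectly.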

For some time, we treated these tests as our regression and acceptance tests, and that was good enough. Over time, we added support for many UI controls in different kinds of applications, and we ran into the problem of maintaining a consistent API. We started using static code analysis tools and mocking techniques, and we created tests that run on third-party production applications, mainly CRM and ERP systems.

After doing many types of testing, we decided to revisit the end-to-end approach we had abandoned earlier. This time it turned out to be possible. We now have tests that automatically record and play back another test running in the same application.

We did it. Why was it not possible at the beginning of our journey but then became feasible after some time? Of course, one answer is that we became more experienced. But I think it also happened because the tool reached a certain level of maturity. When an application is stable and well tested at the unit and module level, it becomes ready for UI test automation.

As in the tale of the tortoise and the hare, slow and steady wins the race.